6 Steps to Prioritize With the ICE Model

Ruben Buijs

Founder & Digital Consultant

Written on Mar 12, 2024

Updated on Mar 13, 2024

13 minutes

Prioritization is an essential part of product management. You can only spend your time once, so you need to make sure you are doing the right things.

In my 9 years in consulting at Accenture and Ernst & Young, I have seen a lot of companies struggle with this.

Oftentimes, product managers prioritize based on gut feeling or on whichever stakeholder has the biggest mouth (aka the boss).

Now, this is not an optimal way to do business. You want a more objective way of determining priorities.

There are several prioritization frameworks that can help you with this.

I am planning to run through a couple of them and share my tips and tricks along the way, such as how to deal with regulatory items and enablers. Let's dive into the first one: the ICE scoring model.

Table of contents

- What is the ICE Scoring Model?

- Six steps to prioritize development work with ICE

- Benefits of ICE

- Drawbacks of ICE

- Do's and don'ts of ICE

- Conclusion

What is the ICE Scoring Model?

This framework is a solid choice for product managers who are just starting out with product prioritization. But more experienced ones benefit from it too.

It was invented by Sean Ellis, who coined the term “growth hacking” and is known for helping companies experiment.

The framework helps you make a “good enough” estimate of priority. Be aware that it is not a perfect score. But it does help you see which features are great and which ones you should not focus on.

ICE is an acronym for three factors:

- Impact – How much does this contribute to our goal(s)?

- Confidence – How confident are you that this will actually work?

- Ease – How easy is it to implement this?

As you can see, it is an evolution of the classic impact/effort analysis. It basically adds confidence into the equation.

In my experience, you need to set some guardrails for the factors. If you don't, you can easily fool yourself.

Let's dive into the different factors and then see how this comes together in a priority score.

Six steps to prioritize development work with ICE

1. List features, enablers, regulatory items

While it may be obvious, you need to start by listing the tasks and features you might want to pick up.

Tip: keep things MECE (mutually exclusive, collectively exhaustive). For example, "SSO login" and "Tagging" are on the same level, but "Adjust color of submit button" and "Build new SaaS app" are not.

These examples may look obvious, but I have seen a lot of people, even product managers, struggle to structure items at the same level.

Make sure you spend enough time to make a decent list. It can be annoying when you are halfway through prioritizing and something new gets added. I ask my customers, partners, and coworkers for their input using surveys or software.

2. Brace for Impact

With our list in hand, we are now going to score each item on the three ICE factors.

We start with Impact: understanding how much a feature contributes to your goal(s).

This means that you first need to set your goals if you have not done so yet. Does this mean that the score changes when your goals change? Yes, it does!

You need to regularly review your goals and update the impact scores accordingly.

Say we have set our goals. Now what?

You need a way to measure the impact on your goals consistently. I like the OKR goal-setting framework for this.

Example

Your goal is "zero world hunger" (pretty cool btw).

How can you measure the impact of an item on it?

You can think about:

- Number of people provided with safe, nutritious, and sufficient food

- Percentage of harvest, transport, and storage losses avoided

Now, how can you determine the impact of your app development on this goal? And can you reuse that method for your other goals? My answer: that becomes too difficult. Remember, the goal of ICE is simple and fast prioritization. It is not an exact science.

There is no silver bullet or an ideal scale to determine the impact here.

Scoring impact

With ICE, I typically use one of two methods to get a score on a 1-10 scale:

- I determine the impact

- We determine the impact

Which one you should apply depends on how fast you need to move.

For the first, I think about the goal and the feature and estimate the impact myself. Simple and fast, but subjective.

For the second, you don't do it alone; you ask others to join you. It can be your team (maybe using some kind of planning poker), or it can be together with clients. Better thought out, but slower.

Like in Agile estimation, it can be worthwhile to have a reference feature: one that you give a 5. Does the feature you are scoring rank higher or lower than the reference?

By the way, don't go for scores like 5.87. Keep things simple. There is not much precision here, so why pretend otherwise?
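If you go the "we determine" route, you need some way to turn everyone's votes into one score. The Python sketch below is one possible approach, not a prescribed part of ICE: it assumes each person votes on the 1-10 scale relative to a reference feature scored at 5, and it combines the votes with a low median so the result is always a whole number. The function name `team_impact_score` is hypothetical.

```python
from statistics import median_low


def team_impact_score(votes: list[int]) -> int:
    """Combine individual Impact votes (1-10) into one whole-number score.

    Hypothetical helper: each vote is assumed to be given relative to a
    reference feature that the team scored a 5.
    """
    if not votes:
        raise ValueError("need at least one vote")
    for vote in votes:
        if not 1 <= vote <= 10:
            raise ValueError(f"vote {vote} is outside the 1-10 scale")
    # median_low always returns one of the actual votes, so the result is a
    # whole number (no 5.87s), and a single outlier cannot pull it far.
    return median_low(votes)


# Four team members vote on one feature, compared with the reference feature.
print(team_impact_score([6, 7, 7, 9]))  # 7
```

With an even number of votes, `median_low` picks the lower of the two middle votes, which is a deliberately conservative choice; `median_high` would be the optimistic alternative.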

3. At Ease, Soldier

With our second factor, Ease, you want to review how difficult it is to implement something.

I regard this as the traditional effort factor. How much time does it cost to build this?

Ideally, you set a common scale that you can use across all features. This helps reduce the subjective part of prioritization. Scoring ease is typically a bit more straightforward than scoring impact.

A scale can be something like:

| Person-weeks | Ease |
|---|---|
| < 1 week | 10 |
| 1-2 weeks | 9 |
| 2-3 weeks | 8 |
| 4-5 weeks | 7 |
| 6-7 weeks | 6 |
| 8-9 weeks | 5 |
| 10-12 weeks | 4 |
| 13-16 weeks | 3 |
| 17-25 weeks | 2 |
| > 26 weeks | 1 |

(Source: Itamar Gilad)
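To apply a scale like this consistently, it helps to encode it once. Below is a minimal Python sketch that maps a person-weeks estimate to an Ease score. The function name is hypothetical, and the boundary handling is my own assumption, since the table leaves some gaps open (for example 3.5 weeks, or exactly 26 weeks): an estimate in a gap falls into the next range up.

```python
def ease_from_weeks(person_weeks: float) -> int:
    """Map an effort estimate in person-weeks to an Ease score (1-10).

    Assumption: estimates that fall between two rows of the table are
    assigned to the next (harder) row.
    """
    if person_weeks < 0:
        raise ValueError("effort cannot be negative")
    if person_weeks < 1:
        return 10
    # (inclusive upper bound in person-weeks, Ease score)
    scale = [(2, 9), (3, 8), (5, 7), (7, 6), (9, 5), (12, 4), (16, 3), (25, 2)]
    for upper_bound, ease in scale:
        if person_weeks <= upper_bound:
            return ease
    return 1  # assumed: anything above 25 person-weeks


for weeks in (0.5, 2, 4, 10, 30):
    print(weeks, ease_from_weeks(weeks))
```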

I typically suggest using a Fibonacci sequence (1, 2, 3, 5, 8, 13, 21, 34, etc.) for the weeks/time periods, mostly because larger estimates are less precise, and the widening gaps between Fibonacci numbers make it easier to reach consensus. You don't even need all 10 levels of Ease to calculate meaningful scores.

Also, when an item scores below 2 (a.k.a. elephant size), please break it down into smaller chunks. You probably have an epic on your hands instead of a feature. There is only one way to eat an elephant: piece by piece.
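Combining the Fibonacci buckets with the elephant check could look like the Python sketch below. The bucket-to-Ease mapping is hypothetical: it loosely follows the person-weeks table and uses only eight levels, and the function names are illustrative. An estimate is rounded up to the next Fibonacci bucket, and anything scoring below 2 is flagged for breaking down.

```python
# Fibonacci buckets for effort estimates, in weeks.
FIBONACCI_WEEKS = [1, 2, 3, 5, 8, 13, 21, 34]

# Hypothetical mapping from bucket to Ease, loosely following the
# person-weeks table; eight levels are enough, we don't need all ten.
EASE_PER_BUCKET = {1: 10, 2: 9, 3: 8, 5: 7, 8: 5, 13: 3, 21: 2, 34: 1}


def snap_to_fibonacci(estimate_weeks: float) -> int:
    """Round an effort estimate up to the next Fibonacci bucket."""
    for bucket in FIBONACCI_WEEKS:
        if estimate_weeks <= bucket:
            return bucket
    return FIBONACCI_WEEKS[-1]  # everything larger lands in the top bucket


def score_ease(estimate_weeks: float) -> tuple[int, bool]:
    """Return (Ease score, whether the item is elephant-sized and should be split)."""
    ease = EASE_PER_BUCKET[snap_to_fibonacci(estimate_weeks)]
    return ease, ease < 2


print(score_ease(4))   # (7, False): lands in the 5-week bucket
print(score_ease(30))  # (1, True): an elephant, break it down
```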

Note that this scale simplifies the complexity of an item. I have seen items that were scored as easy turn into long-term projects. For example, a cloud migration for MS Dynamics: the client thought it was easy, but in reality it took a year...

When determining ease, think about the team’s capacity, capabilities, and available resources. Also account for the time it takes to hire people or learn new skills.

Optimistic folks (like me) think it does not take much time, but how confident are you about that? I recommend taking this into account when you score the Confidence factor.

4. Get Confident

With the last factor, Confidence, you want to understand whether something will work for sure or whether it is just a guess.

With confidence, you can distinguish between items that are based on opinions and items that are based on data. If data tells you a feature has a solid impact on your goals, it may be a better bet than one that is based on opinions.

Like with Ease, if an item has a low score, you should act on it: run some user validation interviews or a spike to increase your confidence.

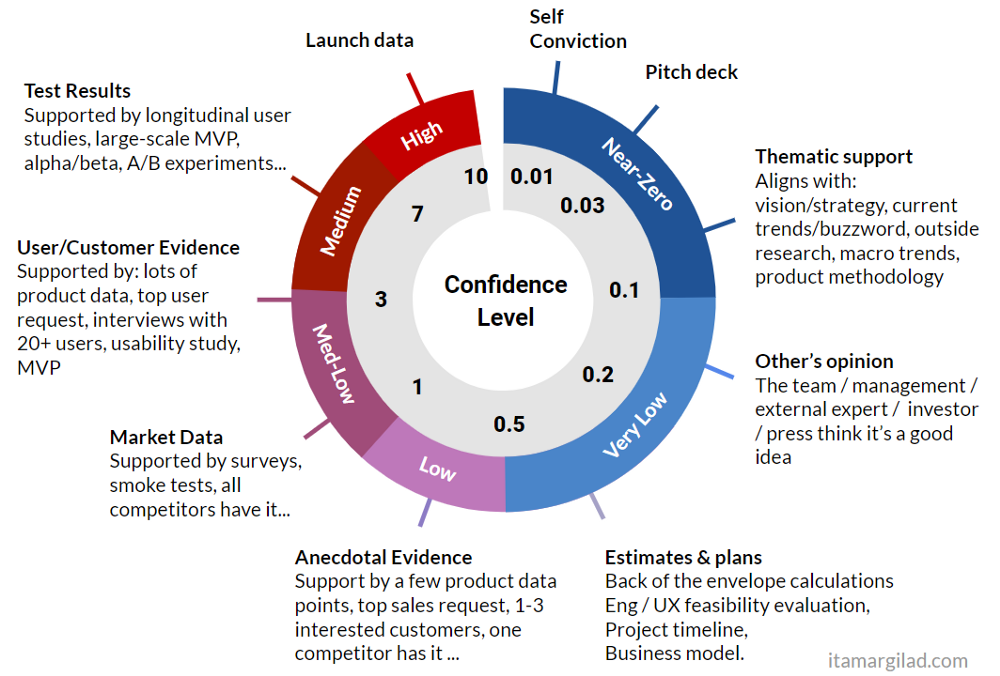

As for a scale, Itamar Gilad's confidence model looks interesting. It distinguishes between different confidence levels, with explanations. But I don't completely like it, because only three levels provide a score above 1 (top-left quarter).

I would be careful with scores below 1. Because the factors are multiplied, a score below 1 affects the total score strongly, and in my opinion it influences the priority too much.

You can use a scale like this, but then start at 1 and go up to 10:

(Source: Itamar Gilad)
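A quick calculation shows why sub-1 Confidence values are so heavy-handed. In this minimal Python sketch (function names are illustrative), the same feature with Impact 8 and Ease 8 drops from a score of 64 to 6.4 when Confidence goes from 1 to 0.1, a tenfold cut no matter how strong the other two factors are. The optional guard enforces the 1-10 range.

```python
def ice_score(impact: float, confidence: float, ease: float) -> float:
    """ICE priority: Impact x Confidence x Ease."""
    return impact * confidence * ease


def check_confidence(value: float) -> float:
    """Reject Confidence values outside a 1-10 scale."""
    if not 1 <= value <= 10:
        raise ValueError(f"Confidence {value} is outside the 1-10 scale")
    return value


# Same feature (Impact 8, Ease 8) with different Confidence values:
# going from 1 to 0.1 divides the score by ten.
for confidence in (0.1, 0.5, 1, 3):
    print(f"Confidence {confidence}: score {ice_score(8, confidence, 8):g}")
```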

Especially here, it can be worthwhile to involve your team. Some people may be skeptical and may have valid concerns. You can do this using something like planning poker.

Note that if your team gives wildly different Confidence scores, you need to discuss the feature further to understand the concerns. Then revote, and repeat until you reach more agreement.
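Deciding when votes are "wildly different" is easier with an agreed rule of thumb. The Python sketch below assumes one: if the highest and lowest Confidence votes are more than 3 points apart, discuss and revote. Both the function name and the threshold of 3 are hypothetical; pick whatever works for your team.

```python
def needs_discussion(votes: list[int], max_spread: int = 3) -> bool:
    """True when Confidence votes are too far apart to settle on a score yet.

    max_spread is a hypothetical threshold, not part of ICE itself.
    """
    return max(votes) - min(votes) > max_spread


round_1 = [2, 8, 5, 9]  # wildly different: talk about the concerns first
round_2 = [5, 6, 6, 7]  # after discussion: close enough to agree on a score
print(needs_discussion(round_1), needs_discussion(round_2))  # True False
```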

5. Calculate scores

We have listed our features and scored each of the three factors on a scale of 1-10. Now we can start calculating.

The ICE score calculation is:

Priority = Impact x Confidence x Ease

Let's build a table of our features and then add in the figures for each factor:

| Feature | Impact [1-10] | Confidence [1-10] | Ease [1-10] | Score [I x C x E] |
|---|---|---|---|---|
| SSO login | 5 | 5 | 5 | 125 |
| Tagging | 3 | 10 | 4 | 120 |
| Segmentation | 7 | 2 | 6 | 84 |

Now we have a pretty good picture, in short:

- The Segmentation feature is the last thing we need to look at. Its score is very low compared to the others.

- SSO login and Tagging are quite close. We may need to tweak their scores later on (see the next step).
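In a spreadsheet, this is just a formula column. The same calculation as a minimal Python sketch, using the three features from the table; the `Item` class is illustrative and not part of any tool. It also rejects scores outside the 1-10 scale.

```python
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    impact: int      # 1-10
    confidence: int  # 1-10
    ease: int        # 1-10

    def __post_init__(self) -> None:
        for factor in (self.impact, self.confidence, self.ease):
            if not 1 <= factor <= 10:
                raise ValueError(f"{self.name}: scores must be on a 1-10 scale")

    def ice_score(self) -> int:
        # Priority = Impact x Confidence x Ease
        return self.impact * self.confidence * self.ease


backlog = [
    Item("SSO login", impact=5, confidence=5, ease=5),
    Item("Tagging", impact=3, confidence=10, ease=4),
    Item("Segmentation", impact=7, confidence=2, ease=6),
]

# Highest score first
for item in sorted(backlog, key=Item.ice_score, reverse=True):
    print(f"{item.name:<13} {item.ice_score()}")
```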

Different formulas

When it comes to mathematics, there are multiple roads to Rome. Most folks multiply the factors, while others take the average.

So, is there a difference?

In short, yes there is a difference.

Below, you see the table we just had, but with both calculation methods. You can see that the average method gives different results from the multiplication method.

| Feature | Score [I x C x E] | Score [(I + C + E) / 3] |
|---|---|---|
| SSO login | 125 | 5.0 |
| Tagging | 120 | 5.7 |
| Segmentation | 84 | 5.0 |

What do we see?

- Segmentation has gone way up and is now on par with SSO login.

- We have a different winner: Tagging, which previously ranked below SSO login.

How to deal with this? First of all, there is no prioritization method that is perfect. You always need to think for yourself.

Second, I would stick to a single prioritization calculation.

Third, you need to consider which scoring method you think is fair. With multiplication, a low score on any one factor pulls the whole score down sharply, while averaging evens out the factors more.
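You can reproduce this comparison in a few lines. The Python sketch below scores the three example features both ways and ranks them. Besides showing the different winner, it makes the precedence explicit: the average has to be computed as (I + C + E) / 3, not I + C + E / 3. Function names are illustrative.

```python
# (Impact, Confidence, Ease) per feature, from the example table
items = {
    "SSO login": (5, 5, 5),
    "Tagging": (3, 10, 4),
    "Segmentation": (7, 2, 6),
}


def multiply(impact, confidence, ease):
    return impact * confidence * ease


def average(impact, confidence, ease):
    # Parentheses matter: (I + C + E) / 3, not I + C + E / 3
    return (impact + confidence + ease) / 3


def rank(score):
    """Feature names from highest to lowest score (ties keep input order)."""
    return sorted(items, key=lambda name: score(*items[name]), reverse=True)


print("multiply:", rank(multiply))
print("average: ", rank(average))
```

To see the "drag" effect in isolation, take a hypothetical feature scored Impact 10, Confidence 1, Ease 10: multiplying gives 100, below SSO login's 125, while averaging gives 7.0, above every feature in the table.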

Scoring obligations/regulatory items

This is a difficult topic for most companies. I have seen business features put on hold because regulatory items needed to be done first.

For example, the IFRS 17 accounting standard for insurers caused them to spend hundreds of millions on anything remotely related to it. No time for customer demands...

I have also seen companies deliver little business value because they spent too much time on security patches and updates.

So you need to take this into account. But how do you prioritize it in ICE?

I don't believe there is a perfect way to do this. Some other frameworks, such as WSJF, include a risk-reduction component that can help to prioritize this kind of work. ICE does not. So, how can we prioritize it?

Let's go through the factors. You can still determine Ease and Confidence; those work the same way. By the way, you should base Confidence only on your Ease estimate here.

Then, we are left with Impact.

For these items, I use an alternative scale for Impact: I assess the negative impact from a business point of view instead.

Lastly, you should determine your risk appetite. Realistically, you cannot stay on top of every patch, update, regulation, etc. So which ones are crucial for your business, and which ones are not? In other words, how is your business impacted by them?
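Put together, this approach for regulatory items could look roughly like the Python sketch below. Everything in it is an assumption for illustration: the field names, the 1-10 "downside" scale standing in for Impact (how badly the business suffers if the item is skipped), the item names, and expressing risk appetite as a single threshold below which you accept the risk.

```python
from dataclasses import dataclass


@dataclass
class RegulatoryItem:
    name: str
    downside: int    # Impact: how badly the business suffers if we skip it (1-10)
    confidence: int  # confidence in the Ease estimate only (1-10)
    ease: int        # 1-10


def prioritize_regulatory(items, risk_appetite):
    """Drop items whose downside we accept, then rank the rest by ICE.

    risk_appetite is a hypothetical 1-10 threshold: items with a downside
    at or below it are accepted as risk rather than scheduled.
    """
    in_scope = [item for item in items if item.downside > risk_appetite]
    return sorted(
        in_scope,
        key=lambda item: item.downside * item.confidence * item.ease,
        reverse=True,
    )


items = [
    RegulatoryItem("Hypothetical reporting change", downside=9, confidence=6, ease=3),
    RegulatoryItem("Hypothetical library patch", downside=2, confidence=9, ease=9),
    RegulatoryItem("Hypothetical security update", downside=7, confidence=8, ease=7),
]
for item in prioritize_regulatory(items, risk_appetite=3):
    print(item.name)
```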

Scoring enablers

Enablers are pieces of work that support upcoming business requirements. They can speed up development or improve other areas, such as infrastructure or security.

The ICE framework can be difficult to apply here, because what is their Impact?

I don't use ICE to determine the priority of enablers. I prioritize the features, put them on a draft roadmap, and then work out which enablers need to be done.

The point is that you want to deliver business value to your users, so that should be the focus.
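That "features first, enablers second" order can be sketched in Python as follows. The ICE scores come from the example table; the enabler names and dependencies are hypothetical. Features are sorted into a draft roadmap, and each enabler is scheduled at the point where the first feature needs it.

```python
# (feature, ICE score, enablers it depends on). Scores are from the example
# table; the enabler names and dependencies are hypothetical.
features = [
    ("Segmentation", 84, ["Search index", "Event pipeline"]),
    ("SSO login", 125, ["Identity provider integration"]),
    ("Tagging", 120, ["Search index"]),
]


def enablers_in_roadmap_order(features):
    """List enablers in the order the prioritized features first need them."""
    draft_roadmap = sorted(features, key=lambda feature: feature[1], reverse=True)
    enablers = []
    for _name, _score, needed in draft_roadmap:
        for enabler in needed:
            if enabler not in enablers:  # each enabler is scheduled once
                enablers.append(enabler)
    return enablers


print(enablers_in_roadmap_order(features))
```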

6. Review outcomes and tweak priorities

After applying the ICE prioritization framework, it's important to review the outcomes and make necessary tweaks to the priorities. Here are some steps you can take to effectively review ICE outcomes:

- Review your scores: Start by reviewing the scores you assigned to each idea or feature using the ICE framework. Look for any patterns or trends that emerge.

- Re-evaluate assumptions: Take a closer look at any assumptions you made when scoring each item. Are there any new data or insights that suggest you should revise your assumptions?

- Consider resource constraints: Look at your available resources (time, budget, team members, etc.) and assess whether you can realistically pursue the ideas or features with the highest scores.

- Refine priorities: Based on your review and re-evaluation, make any necessary tweaks to your priorities. You may need to adjust scores or shift priorities around to better align with your goals and available resources.

- Communicate changes: Once you've refined your priorities, communicate the changes to your team and stakeholders. This will help ensure everyone is on the same page and can work towards achieving the newly established priorities.
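For the resource check, a simple greedy pass over the ranked list already tells you a lot. The Python sketch below is one way to do it, not part of ICE itself; the function name, the 16-week capacity, and the effort figures (chosen to roughly match the example Ease scores) are hypothetical.

```python
def plan_within_capacity(ranked, capacity_weeks):
    """Walk the ranked list and take every item that still fits.

    ranked: (name, person-weeks) pairs, highest ICE score first.
    Items that don't fit are skipped, so a smaller, lower-ranked item
    can still make it in.
    """
    plan, used = [], 0
    for name, weeks in ranked:
        if used + weeks <= capacity_weeks:
            plan.append(name)
            used += weeks
    return plan, used


# Hypothetical effort estimates, roughly in line with the example Ease scores.
ranked = [("SSO login", 8), ("Tagging", 10), ("Segmentation", 6)]
print(plan_within_capacity(ranked, capacity_weeks=16))
```

Here Tagging is skipped even though it outranks Segmentation, because it does not fit the remaining capacity. That is exactly the kind of outcome worth discussing before you communicate the new priorities.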

Benefits of ICE

The ICE prioritization framework offers several benefits to individuals or teams looking to streamline their decision-making. By using ICE, you avoid making decisions based on guesswork or emotions, and instead prioritize tasks or features using explicit scores that you can back with data where you have it. This helps ensure that you focus your time and resources on the most impactful tasks or features that align with your business goals. Additionally, the ICE framework facilitates better communication and collaboration among team members by providing a standardized method for evaluating and prioritizing ideas. Overall, ICE is a simple yet effective tool for enhancing productivity and achieving better results.

Drawbacks of ICE

Now that we understand the ICE framework, what do I think about it?

While it has certain benefits, such as simplicity, there are also drawbacks that you should be aware of:

- The factors are subjective. Who decides what is a 1 or a 2? We tackled this partly by using scales.

- All factors are weighted equally, and when you multiply them, one low factor can drag the whole score down.

- There is no built-in method for regulatory items; everything follows the same scoring model.

- The ICE model does not explicitly take into account how many customers an item reaches. For that, consider the RICE model, which adds Reach as a factor. I will cover it in a later article, but you can Google it in the meantime.

- Scores change over time, so you regularly have to revisit the factors and score them again.

Do's and don'ts of ICE

The ICE prioritization framework can be a powerful tool for product managers, but it's important to use it properly. Here are some do's and don'ts to keep in mind when applying ICE:

Do:

- Start by clearly defining your objectives and goals.

- Involve the right stakeholders in the prioritization process.

- Use the ICE score to prioritize features or ideas, but also consider other factors such as resources, technical feasibility, and market demand.

- Revisit and update your ICE scores regularly as new information becomes available or priorities change.

- Communicate your prioritization decisions transparently and explain the rationale behind them.

Don't:

- Rely solely on the ICE score to make decisions. Use it as one of several inputs in your prioritization process.

- Ignore feedback from users or other stakeholders just because a feature or idea scored low on the ICE scale.

- Rush through the prioritization process without giving it enough time and attention.

- Base your ICE scores solely on your own opinions or assumptions. Use data and insights from multiple sources to inform your scores.

- Use ICE as a one-size-fits-all solution. Different projects or products may require different prioritization frameworks or approaches.

Conclusion

Prioritizing features is hard and takes time and discipline. The ICE model helps you make a “good enough” estimate of priority. You are now equipped with the tools to do this for your own product.

Finally, you can share your priority outcomes with your customers and stakeholders using your product roadmap or kanban board.