How to Prioritize Feature Requests: 4 Frameworks

Your feedback board has 300 feature requests. Your team can ship maybe 15 this quarter. How do you prioritize feature requests and decide which 15 to build?

If you're picking features based on gut feeling, whoever yells the loudest, or a simple vote count, you're leaving revenue on the table. You're also burning engineering time on the wrong things.

Prioritization frameworks give you a repeatable way to evaluate feature requests against each other. They won't make the decision for you. That still requires judgment. But they replace "I think we should build X" with "here's why X scores higher than Y."

This guide covers four proven frameworks, shows you how to combine them with real voting and revenue data, and highlights the mistakes that trip up most product teams.

For a deep dive into all 10 popular prioritization frameworks (including Kano, WSJF, Cost of Delay, and more), see our complete product prioritization framework guide. Not sure which framework to use? Try our framework selection guide or framework comparison.

You can't build everything. That sounds obvious, but most teams behave as if they can. They say "yes" to too many things, spread engineering thin across 20 initiatives, and end up shipping nothing well.

Good prioritization creates three outcomes:

The biggest risk isn't picking the wrong framework. It's not having one at all.

Below is a quick overview of the four most practical frameworks for sorting through a feature request backlog. Each one answers a slightly different question.

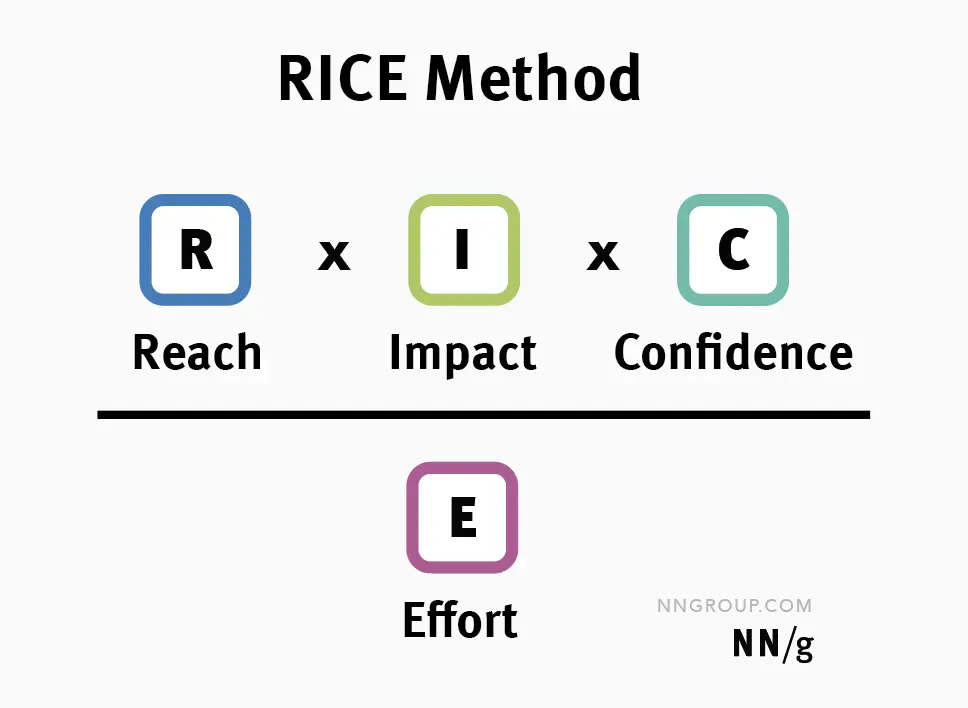

RICE scores features on Reach, Impact, Confidence, and Effort. The formula, (Reach × Impact × Confidence) / Effort, produces a single number you can rank by.

It's the best fit when you have a large backlog and real usage data. Assuming similar impact and confidence, a Slack integration request with 2,000 affected users and 2 person-months of effort will clearly outscore a dashboard widget with 500 users and 4 person-months. It has four times the reach at half the effort, so no debate is needed.

Try the RICE calculator | Full RICE guide
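To make the arithmetic concrete, here is a minimal Python sketch of the RICE formula applied to the two requests above. The Impact (2) and Confidence (0.8) values are hypothetical. They are held equal for both features, so only Reach and Effort drive the difference.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    reach: float       # users affected per period (e.g. per quarter)
    impact: float      # relative scale, e.g. 0.25 (minimal) to 3 (massive)
    confidence: float  # 0.0 to 1.0
    effort: float      # person-months


def rice_score(feature: Feature) -> float:
    """Return (Reach x Impact x Confidence) / Effort."""
    if feature.effort <= 0:
        raise ValueError("effort must be positive")
    return feature.reach * feature.impact * feature.confidence / feature.effort


# Reach and effort come from the example; impact and confidence are hypothetical.
backlog = [
    Feature("Slack integration", reach=2000, impact=2, confidence=0.8, effort=2),
    Feature("Dashboard widget", reach=500, impact=2, confidence=0.8, effort=4),
]

for feature in sorted(backlog, key=rice_score, reverse=True):
    print(f"{feature.name}: {rice_score(feature):.0f}")
```

Under these assumptions the Slack integration scores 1,600 and the dashboard widget scores 200. Four times the reach at half the effort produces an eight-fold gap.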

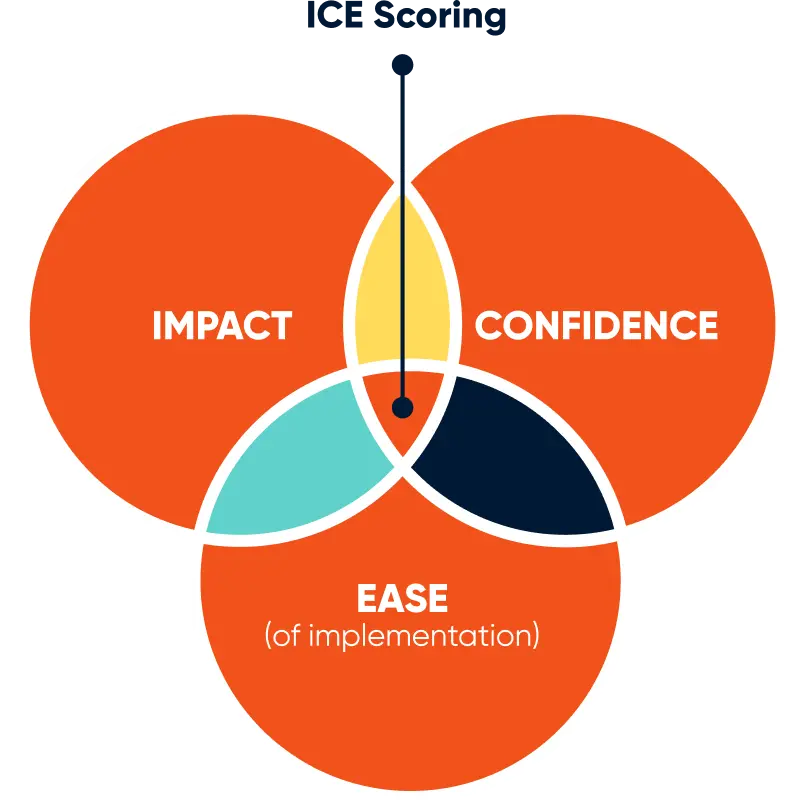

ICE is a lighter alternative: Impact x Confidence x Ease, all on 1-10 scales. No need to look up exact user counts or estimate person-months. You trade precision for speed.

Use ICE when you need to score a batch of requests quickly in a single session. The main risk is inconsistency — keep the same person or small group scoring so the scales stay calibrated.

Try the ICE calculator | Full ICE guide
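Here is a minimal ICE sketch with the same caveats. The two requests and their 1-10 scores are hypothetical. The range check rejects any value outside the scale described above.

```python
def ice_score(impact: int, confidence: int, ease: int) -> int:
    """Return Impact x Confidence x Ease, each scored on a 1-10 scale."""
    for label, value in (("impact", impact), ("confidence", confidence), ("ease", ease)):
        if not 1 <= value <= 10:
            raise ValueError(f"{label} must be between 1 and 10, got {value}")
    return impact * confidence * ease


# Hypothetical requests, scored in one session by the same small group:
# (impact, confidence, ease)
requests = {
    "CSV export": (6, 8, 9),
    "Single sign-on": (9, 6, 3),
}

ranked = sorted(requests, key=lambda name: ice_score(*requests[name]), reverse=True)
for name in ranked:
    print(f"{name}: {ice_score(*requests[name])}")
```

CSV export scores 432 and single sign-on scores 162. The three factors multiply, so one low score (Ease of 3 here) pulls the total down sharply. This is also why uncalibrated scorers can swing the ranking.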

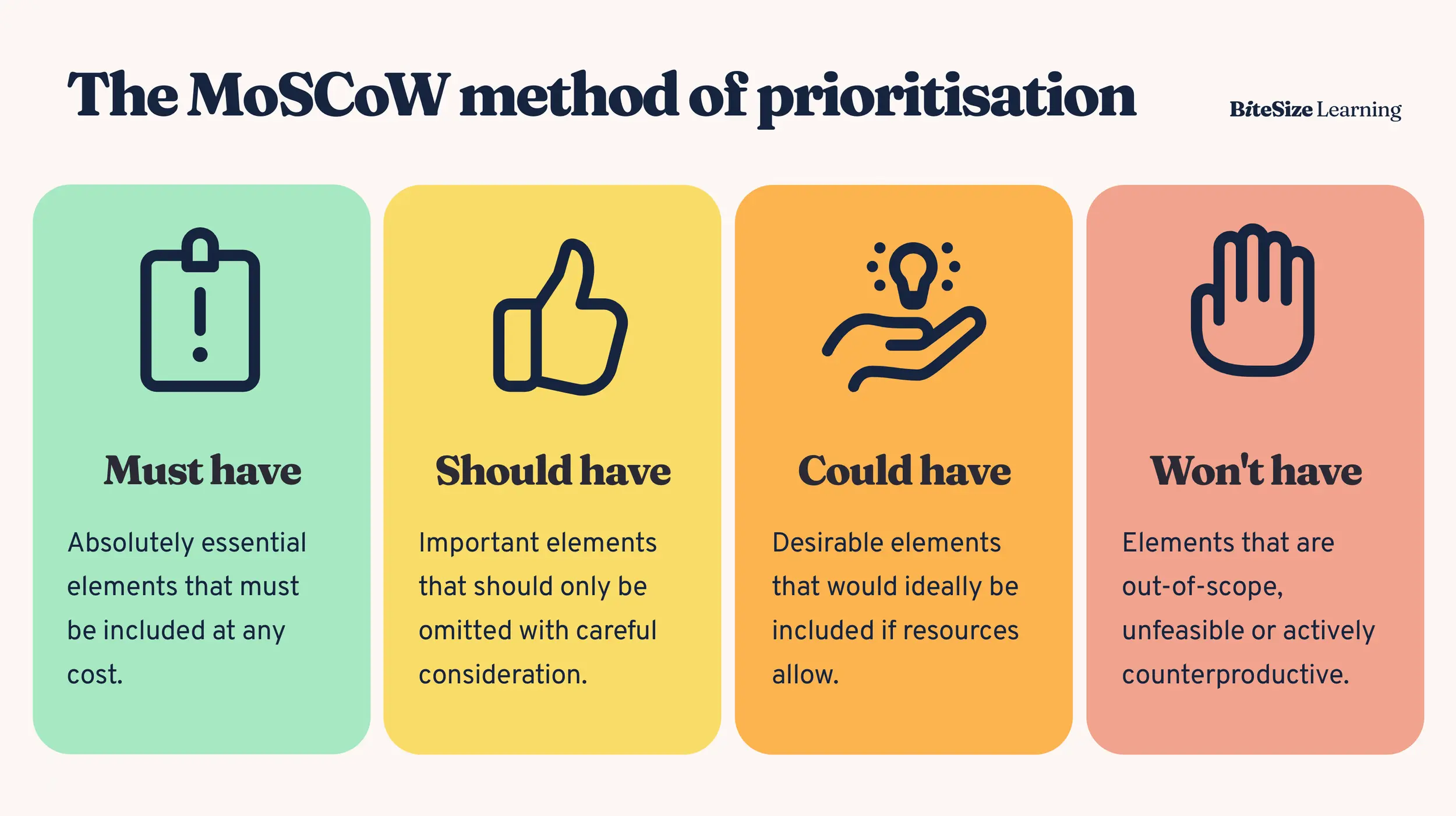

MoSCoW is a classification system, not a scoring model. You sort requests into Must Have, Should Have, Could Have, and Won't Have. It forces an explicit decision about what is in and out of scope for each release cycle.

The danger is that everything becomes a "Must Have." Be disciplined: no more than 60% of items should be Must or Should. If everything is a Must, nothing is.

Try the MoSCoW tool | Full MoSCoW guide
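The 60% rule of thumb is easy to check automatically. This sketch counts the items in each category for one release and flags the plan when Must and Should together exceed 60% of items. The category names follow MoSCoW, and the release plan is hypothetical.

```python
from collections import Counter

CATEGORIES = ("Must", "Should", "Could", "Won't")


def must_should_share(plan: dict[str, str]) -> float:
    """Return the fraction of items classified as Must or Should."""
    if not plan:
        return 0.0
    unknown = set(plan.values()) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unknown categories: {sorted(unknown)}")
    counts = Counter(plan.values())
    return (counts["Must"] + counts["Should"]) / len(plan)


# Hypothetical release plan: feature -> MoSCoW category.
release_plan = {
    "API access": "Must",
    "SSO": "Must",
    "Audit log": "Should",
    "Dark mode": "Could",
    "CSV export": "Could",
    "Mobile app": "Won't",
}

share = must_should_share(release_plan)
print(f"Must + Should: {share:.0%}")
if share > 0.6:
    print("Too many Musts and Shoulds; move some items down a category.")
```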

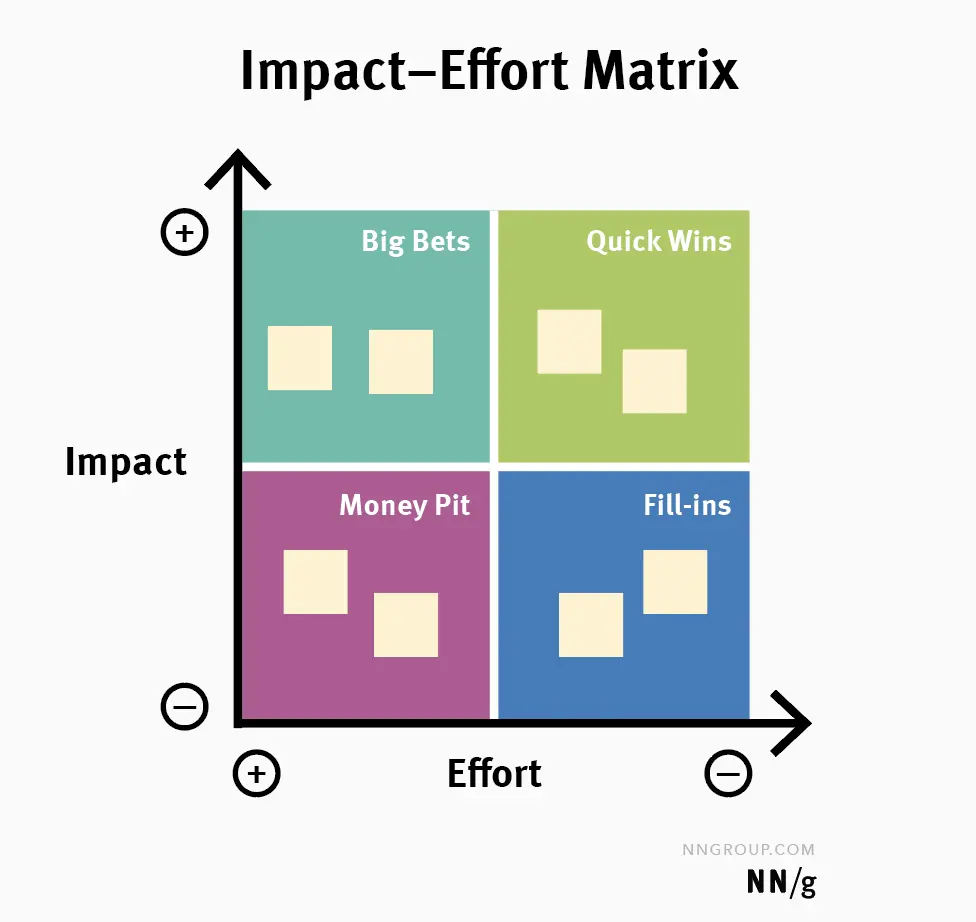

Plot requests on a 2x2 grid of high/low impact vs. high/low effort, and four quadrants emerge: Quick Wins (high impact, low effort), Major Projects (high impact, high effort), Fill-Ins (low impact, low effort), and Time Sinks (low impact, high effort). It's the fastest framework and works well for team workshops.

Use it as a first pass to separate quick wins from time sinks, then apply RICE or ICE to rank features within each quadrant.
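One way to encode the grid is to score impact and effort on a 1-10 scale and split each axis at a threshold. The cutoff of 5 used here is an assumption, so use whatever line your team agrees on. Requests in each quadrant are then ranked by a score from a later RICE or ICE pass. All names and numbers below are hypothetical.

```python
from collections import defaultdict


def quadrant(impact: int, effort: int, threshold: int = 5) -> str:
    """Map 1-10 impact/effort scores to a quadrant; a score equal to the threshold counts as low."""
    high_impact = impact > threshold
    high_effort = effort > threshold
    if high_impact and not high_effort:
        return "Quick Wins"
    if high_impact and high_effort:
        return "Major Projects"
    if not high_impact and not high_effort:
        return "Fill-Ins"
    return "Time Sinks"


# Hypothetical requests: (impact, effort, score from a later RICE or ICE pass).
requests = {
    "CSV export": (7, 2, 420),
    "Tooltip copy fix": (3, 1, 90),
    "SSO": (9, 8, 150),
    "Custom themes": (4, 8, 40),
    "Bulk edit": (8, 4, 310),
}

by_quadrant = defaultdict(list)
for name, (impact, effort, score) in requests.items():
    by_quadrant[quadrant(impact, effort)].append((score, name))

# Rank within each quadrant, highest score first.
for q in ("Quick Wins", "Major Projects", "Fill-Ins", "Time Sinks"):
    ranked = [name for _, name in sorted(by_quadrant[q], reverse=True)]
    print(f"{q}: {ranked}")
```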

You don't have to pick just one. Most teams layer them: score the top candidates with RICE or ICE, classify for a release with MoSCoW, and sanity-check the plan on an Impact-Effort matrix. For a comparison of all 10 frameworks and when to use each, read the full prioritization framework guide.

Frameworks give you a structured way to evaluate features, but they work even better when you feed them real data. One of the most powerful data sources is revenue.

Standard feature voting treats every vote equally. A free trial user's vote counts the same as your largest enterprise customer. That's a problem. The features your highest-paying customers need are often different from what the majority wants.

MRR-weighted voting solves this by connecting your feedback tool to your billing system. When a customer votes on a feature, their vote is weighted by their monthly recurring revenue.

Here's what that looks like in practice:

| Customer | Plan | MRR | Votes for "API Access" | Weighted Vote ($) |
|---|---|---|---|---|
| Small Co | Starter | $29 | 1 | 29 |
| Mid Corp | Growth | $99 | 1 | 99 |
| Big Inc | Enterprise | $499 | 1 | 499 |

Without weighting, these are three equal votes. With MRR weighting, "API Access" has $627 in monthly revenue behind it. If a competing feature has 10 votes but only $290 in weighted value, you know which one moves the needle for your business.
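The weighting itself is just a sum over voters. This sketch reproduces the table above. The competing feature's ten voters are assumed to be $29 Starter customers, which is one way to reach the $290 figure. The data structures are hypothetical and are not ProductLift's data model.

```python
# Monthly MRR per customer, in dollars, taken from the table above.
customer_mrr = {"Small Co": 29, "Mid Corp": 99, "Big Inc": 499}

# Hypothetical: ten more Starter customers at $29 each.
customer_mrr.update({f"Starter {i}": 29 for i in range(1, 11)})

votes = {
    "API Access": ["Small Co", "Mid Corp", "Big Inc"],
    "Competing feature": [f"Starter {i}" for i in range(1, 11)],
}


def weighted_votes(voters: list[str]) -> int:
    """Sum the MRR of every customer who voted, counting each customer once."""
    return sum(customer_mrr[customer] for customer in set(voters))


for feature, voters in votes.items():
    print(f"{feature}: {len(voters)} votes, ${weighted_votes(voters)} MRR")
```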

ProductLift automatically calculates total MRR for each feature request based on who voted for it. You can import MRR data via CSV, sync it through the API, or connect Stripe directly. Once connected, every feature request on your board shows its total MRR alongside the vote count.

For example, say you're comparing two requests on your "All Posts" page:

| Feature Request | Votes | Total MRR |
|---|---|---|
| API access | 45 | $12,350 |
| Dark mode | 120 | $3,400 |

Dark mode has about 2.7x the votes (120 vs. 45), but API access has about 3.6x the revenue behind it ($12,350 vs. $3,400). Without MRR weighting you'd build dark mode first. With it, you can see that your highest-paying customers are asking for API access, and that's the feature that protects your revenue.

You can sort your entire backlog by MRR on the prioritization page, so the features with the most revenue behind them rise to the top automatically.
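Sorting the same two rows by each column shows the ranking flip. The figures come from the table above. The code only illustrates the idea and is not how ProductLift computes its ranking.

```python
backlog = [
    {"feature": "API access", "votes": 45, "mrr": 12_350},
    {"feature": "Dark mode", "votes": 120, "mrr": 3_400},
]

by_votes = [row["feature"] for row in sorted(backlog, key=lambda r: r["votes"], reverse=True)]
by_mrr = [row["feature"] for row in sorted(backlog, key=lambda r: r["mrr"], reverse=True)]

print("By votes:", by_votes)  # Dark mode ranks first
print("By MRR:  ", by_mrr)    # API access ranks first

# MRR per vote shows where the high-value voters are concentrated.
for row in backlog:
    print(f"{row['feature']}: ${row['mrr'] / row['votes']:.0f} MRR per vote")
```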

Even beyond revenue weighting, different customer segments want fundamentally different things:

Smart prioritization considers the segment, not just the vote count. A feature requested by 5 enterprise accounts worth $50K/year each ($250K in combined annual revenue) is worth investigating even if it only has 5 votes on your public board.

In ProductLift, you create segments by saving user filters — for example, "Enterprise" could be all users with MRR above $500, or "Churned" could be users with a canceled status. You can combine criteria like MRR range, plan type, customer status, account age, and more.
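Conceptually, a saved segment is a named predicate over user attributes. The sketch below models the two example segments that way and uses them to filter feature requests. The `User` fields, the sample users, and the votes are hypothetical. They mirror only the criteria mentioned above (MRR and customer status).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class User:
    name: str
    mrr: float   # monthly recurring revenue in dollars
    plan: str
    status: str  # hypothetical values: "active" or "canceled"


# Each segment is a saved filter: a name plus a predicate over user attributes.
SEGMENTS: dict[str, Callable[[User], bool]] = {
    "Enterprise": lambda u: u.mrr > 500,
    "Churned": lambda u: u.status == "canceled",
}

# Hypothetical users and votes.
users = [
    User("Acme", 1200, "Enterprise", "active"),
    User("Globex", 49, "Starter", "canceled"),
    User("Initech", 99, "Growth", "active"),
]
votes = {
    "API access": ["Acme", "Initech"],
    "Dark mode": ["Globex", "Initech"],
}


def members(segment: str) -> list[str]:
    """Return the names of users that match a segment's filter."""
    return [u.name for u in users if SEGMENTS[segment](u)]


def requests_for(segment: str) -> list[str]:
    """Return feature requests with at least one vote from the segment."""
    in_segment = set(members(segment))
    return [feature for feature, voters in votes.items() if in_segment & set(voters)]


print(requests_for("Enterprise"))  # ['API access']
print(requests_for("Churned"))     # ['Dark mode']
```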

Once segments are set up, you can use them to slice your feedback data in two ways:

Filter by segment. Show only feature requests submitted or voted on by a specific segment. For example, filter by "Enterprise" to see exactly what your highest-tier customers are asking for.

Compare segments side by side. Enable segment percentage columns on your posts page to see which segments care about which features:

| Feature Request | Votes | Enterprise (% of votes) | SMB (% of votes) | Free (% of votes) |
|---|---|---|---|---|
| API access | 45 | 80% | 15% | 5% |
| Dark mode | 120 | 25% | 40% | 35% |
| Mobile app | 30 | 60% | 30% | 10% |

Now the picture is clear: API access and mobile app are enterprise priorities. Dark mode is spread across segments with no strong signal from high-value customers.
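Every row in the table above sums to 100%, so each column appears to be a segment's share of that request's votes. Here is a sketch of that calculation. It assumes every voter belongs to exactly one of the three segments. The voter list is hypothetical and sized to reproduce the Mobile app row.

```python
from collections import Counter

SEGMENT_ORDER = ("Enterprise", "SMB", "Free")


def segment_shares(voter_segments: list[str]) -> dict[str, float]:
    """Return each segment's percentage of the votes on one request."""
    counts = Counter(voter_segments)
    total = len(voter_segments)
    if total == 0:
        return {segment: 0.0 for segment in SEGMENT_ORDER}
    return {segment: 100 * counts[segment] / total for segment in SEGMENT_ORDER}


# Hypothetical: the segment of each of the 30 voters on "Mobile app".
mobile_app_voters = ["Enterprise"] * 18 + ["SMB"] * 9 + ["Free"] * 3

shares = segment_shares(mobile_app_voters)
print({segment: f"{pct:.0f}%" for segment, pct in shares.items()})
```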

You can also filter by "Churned" to spot patterns in what former customers were requesting before they left — useful for identifying retention risks before they become churn.

Combine segment data with MRR weighting and framework scoring for the most complete picture of what to build next.

No single framework is enough on its own. The best approach combines structured scoring with real user data:

This layered approach gives you both bottom-up signal (what users are asking for) and top-down structure (how your team evaluates it).

Vote count alone is misleading. A feature with 200 votes from free-tier users can matter less than one with 15 votes from enterprise accounts. Always look at who is voting, not just how many.

Enterprise customers rarely flood your public voting board. They email their customer success manager (CSM) or raise requests in quarterly business reviews (QBRs). Make sure those requests make it into your prioritization process even if they don't have public votes.

Deciding by committee, HiPPO (Highest Paid Person's Opinion), or "let's just see what feels right" leads to inconsistent decisions and stakeholder frustration. Pick any framework and use it consistently.

RICE scores are estimates, not gospel. A score of 500 versus 480 is essentially a tie. Use frameworks to separate the clear winners from the clear losers, and apply judgment to the close calls.
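One way to apply this is to treat adjacent scores within a relative tolerance as a tie and flag those pairs for a human decision. The 10% tolerance below is an arbitrary assumption, not a standard. The feature names are hypothetical.

```python
def is_tie(score_a: float, score_b: float, tolerance: float = 0.10) -> bool:
    """True if the scores differ by less than `tolerance` of the larger one."""
    larger = max(abs(score_a), abs(score_b))
    if larger == 0:
        return True
    return abs(score_a - score_b) / larger < tolerance


def close_calls(scores: dict[str, float], tolerance: float = 0.10) -> list[tuple[str, str]]:
    """List adjacent pairs in the ranking that need a judgment call."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [
        (a, b)
        for a, b in zip(ranked, ranked[1:])
        if is_tie(scores[a], scores[b], tolerance)
    ]


scores = {"Feature A": 500, "Feature B": 480, "Feature C": 200}
print(close_calls(scores))  # [('Feature A', 'Feature B')]
```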

Customer needs change. Market conditions shift. Feature requests from six months ago may be irrelevant today. Review and re-score your backlog quarterly at minimum.
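A simple way to enforce the quarterly review is to record when each request was last scored and list anything older than about 90 days. The request names and dates below are hypothetical.

```python
from datetime import date, timedelta

REVIEW_INTERVAL = timedelta(days=90)  # roughly one quarter

# Hypothetical: when each request was last scored.
last_scored = {
    "API access": date(2025, 1, 10),
    "Dark mode": date(2025, 5, 2),
    "Mobile app": date(2024, 11, 20),
}


def stale_requests(scored_on: dict[str, date], today: date) -> list[str]:
    """Return requests last scored more than one review interval ago, oldest first."""
    stale = [name for name, d in scored_on.items() if today - d > REVIEW_INTERVAL]
    return sorted(stale, key=scored_on.get)


print(stale_requests(last_scored, today=date(2025, 6, 1)))
```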

Prioritization is a team exercise. When one PM scores everything alone, their biases dominate. Get cross-functional input. Engineering provides effort estimates, sales provides revenue impact, and support provides reach.

If everything is high priority, nothing is. Effective prioritization means explicitly deciding what you won't build, not just ordering what you will. The "Won't Have" column in MoSCoW is just as important as the "Must Have."

RICE uses concrete numbers for Reach (actual user count) and Effort (person-months), making it more precise. ICE uses 1-10 scales for Impact, Confidence, and Ease, making it faster but more subjective. Use RICE when you have data. Use ICE when you need speed.

MoSCoW works best for release planning and stakeholder alignment. It forces clear decisions about what's in scope and what's not. Use it when you need to communicate priorities to non-technical stakeholders or when defining what goes into a specific sprint.

Yes, but indirectly. Let customers vote on features and submit requests. Then use their input as one signal alongside revenue data, strategic goals, and effort estimates. Customers should inform prioritization, not control it.

Use a shared scoring framework so everyone evaluates features with the same criteria. Cross-functional scoring sessions where engineering, sales, and support each contribute their perspective reduce bias and build alignment.

Review and re-score your backlog at least quarterly. Customer needs change, market conditions shift, and new data emerges. Weekly reviews of the top candidates keep your roadmap responsive without constant re-scoring of the full backlog.

Yes, and you should. Use MRR-weighted voting to surface demand, RICE or ICE to score the top candidates, MoSCoW to classify for a release, and Impact-Effort to sanity-check the plan. Each framework adds a different lens to the decision.

Doing all of this manually (collecting votes, weighting by revenue, scoring with frameworks, updating statuses) is possible with spreadsheets, but it doesn't scale.

ProductLift combines everything in one platform:

Instead of building a process from scratch, you get a system that captures feedback, helps you prioritize it, and closes the loop with customers when you ship.

Prioritizing feature requests is a skill, not a formula. Frameworks like RICE, ICE, MoSCoW, and Impact-Effort give you structure. Revenue data and customer segmentation give you context. A consistent process gives you credibility with your team and your customers.

Start with one framework, apply it to your current backlog, and iterate. The goal isn't perfect prioritization. It's prioritization that's better than gut feeling, and that gets better over time.

Ready to stop guessing? Try ProductLift free and see your feature requests ranked by real customer demand.

